Building execution-grade AI systems

Agent architectures, durable workflows, and adversarial safety testing.

aaron@hertzfelt.io

AI Systems R&D · Founder, Hertzfelt Labs


Agent Architecture

Execution Flow

User Input: Natural language query

AI Gateway: Vercel AI Gateway

Reasoning: Claude / GPT-4 / Gemini

Tool Execution: Structured outputs + function calls

State Management: Durable execution context

Response: Streaming output

Vercel AI SDK + AI Gateway
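The stages above can be sketched end to end in a few dozen lines. This is a dependency-free illustration, not the actual site code: the Vercel AI SDK and AI Gateway are replaced by stub functions, real model calls would be asynchronous network requests, and every name here (`gateway`, `run`, `wordCount`, `flaky`, `stable`) is hypothetical. The `steps` array is an in-memory stand-in for a durable execution context.

```typescript
// Hypothetical sketch: input -> gateway -> reasoning -> tool -> state -> streamed response.
type ToolCall = { tool: string; args: Record<string, unknown> };
type ModelReply = { text: string; toolCall?: ToolCall };
type Model = (prompt: string) => ModelReply;
type ToolFn = (args: Record<string, unknown>) => { result: string };

// Gateway stage: try each model in order and return the first successful reply.
function gateway(models: Model[], prompt: string): ModelReply {
  let lastError: unknown = new Error("no models configured");
  for (const model of models) {
    try {
      return model(prompt);
    } catch (err) {
      lastError = err; // fall through to the next model
    }
  }
  throw new Error("all models failed: " + String(lastError));
}

// Tool registry: structured arguments in, structured output out.
const tools: Record<string, ToolFn> = {
  wordCount: (args) => {
    const words = String(args["text"] ?? "").split(/\s+/).filter(Boolean);
    return { result: String(words.length) };
  },
};

// Run one request, record each stage in `steps`, and emit the answer chunk by chunk.
function run(
  models: Model[],
  input: string,
  steps: string[],
  onChunk: (chunk: string) => void,
): void {
  const reply = gateway(models, input);
  steps.push("reasoning");
  let answer = reply.text;
  if (reply.toolCall) {
    const tool = tools[reply.toolCall.tool];
    if (!tool) throw new Error("unknown tool: " + reply.toolCall.tool);
    answer += tool(reply.toolCall.args).result;
    steps.push("tool:" + reply.toolCall.tool);
  }
  for (const chunk of answer.split(" ")) onChunk(chunk);
  steps.push("response");
}

// Stub models: the first always fails, the second requests a tool call.
const flaky: Model = () => { throw new Error("timeout"); };
const stable: Model = (prompt) => ({
  text: "Words: ",
  toolCall: { tool: "wordCount", args: { text: prompt } },
});

const steps: string[] = [];
const chunks: string[] = [];
run([flaky, stable], "count these three words", steps, (chunk) => chunks.push(chunk));
```

Because the first stub throws, the gateway falls back to the second model, which is one simple way to read "multi-model fallback" in this flow.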

Aaron Diemel

Founder, Hertzfelt Labs

I spent 15 years as an audio engineer—signal flow, systems thinking, shipping under pressure. In 2022, I made a bet: reskill entirely with AI as my copilot. No bootcamps, no traditional path. Just me and the models, learning to build together.

It worked. The mental models from audio engineering—signal chains, iterative refinement, translating vision into execution—transferred directly to AI systems architecture. I've scaled my capabilities at the same rate the technology has scaled, staying on the frontier while shipping production systems.

In 2023, I founded Hertzfelt Labs as a consulting practice and applied R&D lab. I work with founders and engineering teams on agent architectures, adversarial evaluation, and AI systems that actually execute. This site is built with the same tools and workflows I bring to client work.

Selected Work

Systems that execute, resume, and adapt.

How I Work With Agents

Speed comes from front-loaded architecture and domain translation, followed by delegation to coding agents. Here's the methodology behind shipping functional AI systems rapidly.

Architecture First

Front-load system design and domain translation before writing code. Define execution graphs, state schemas, and tool interfaces upfront.
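As a rough illustration of what defining these pieces upfront can look like, the sketch below declares a state schema, a tool interface, and an execution graph as types before any behavior is written. All names (`RunState`, `Tool`, `GraphNode`, `ExecutionGraph`, `execute`) are hypothetical, and the graph is deliberately linear to keep the example small.

```typescript
// Hypothetical sketch: declare the design as types first, then implement against it.

// State schema: everything a run needs in order to resume.
interface RunState {
  currentNode: string;
  data: Record<string, unknown>;
}

// Tool interface: typed input, typed output.
interface Tool<I, O> {
  name: string;
  execute(input: I): O;
}

// Execution graph: each node returns new data and names its successor (null = done).
interface GraphNode {
  run(state: RunState): Record<string, unknown>;
  next: string | null;
}
type ExecutionGraph = Record<string, GraphNode>;

const upperCase: Tool<{ text: string }, { text: string }> = {
  name: "upperCase",
  execute: (input) => ({ text: input.text.toUpperCase() }),
};

const graph: ExecutionGraph = {
  load: { run: () => ({ text: "draft" }), next: "transform" },
  transform: {
    run: (state) => upperCase.execute({ text: String(state.data["text"]) }),
    next: null,
  },
};

// Walk the graph starting at state.currentNode, so a saved state can resume mid-run.
function execute(g: ExecutionGraph, state: RunState): RunState {
  let current: string | null = state.currentNode;
  let data = state.data;
  while (current !== null) {
    const node = g[current];
    if (!node) throw new Error("unknown node: " + current);
    data = { ...data, ...node.run({ currentNode: current, data }) };
    current = node.next;
  }
  return { currentNode: "done", data };
}

const finished = execute(graph, { currentNode: "load", data: {} });
```

Starting `execute` from a saved `RunState` whose `currentNode` is `"transform"` skips the `load` node entirely, which is the resume behavior a checkpoint-based design relies on.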

Claude.md & Agents.md

Maintain project context files that give coding agents full system awareness. Persistent memory across sessions for consistent architectural decisions.

MCP Servers

Custom and OSS Model Context Protocol servers for tool integration. Extend agent capabilities with workspace tools, database access, and external APIs.
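For orientation, MCP messages are JSON-RPC 2.0, and a tool invocation is a request with the method `tools/call`. The sketch below only builds that request shape by hand. It does not use an MCP SDK, and the tool name `query_database` and its `sql` argument are hypothetical.

```typescript
// Shape of an MCP tool invocation as a JSON-RPC 2.0 request.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

let nextId = 1;

// Build a request for a named tool with structured arguments.
function buildToolCall(name: string, args: Record<string, unknown>): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// "query_database" is a hypothetical tool name used only for illustration.
const request = buildToolCall("query_database", { sql: "SELECT 1" });
const wire = JSON.stringify(request);
```

In practice a client library would handle transport, capability negotiation, and response matching; the point here is only that each tool is addressed by name and takes a plain JSON argument object.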

Agent Delegation

Delegate implementation to coding agents after architecture is set. Claude Code for complex refactoring, Cursor for iteration, v0 for UI prototyping.

Structured Outputs

Type-safe tool calling with Zod schemas and JSON Schema. Reliable structured extraction and function execution with validation at every step.
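The validation step can be illustrated without any dependencies. The sketch below swaps in a hand-written validator where a Zod schema would normally sit, purely so the example runs on its own. The `Invoice` shape and the `parseInvoice` function are hypothetical.

```typescript
// Dependency-free stand-in for a Zod schema; all names are hypothetical.
type Invoice = { vendor: string; total: number };
type ParseResult = { value: Invoice | null; errors: string[] };

// Validate raw model output against the expected shape before anything acts on it.
function parseInvoice(raw: string): ParseResult {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return { value: null, errors: ["output is not valid JSON"] };
  }
  if (typeof data !== "object" || data === null) {
    return { value: null, errors: ["expected a JSON object"] };
  }
  const record = data as Record<string, unknown>;
  const vendor = record["vendor"];
  const total = record["total"];
  const errors: string[] = [];
  if (typeof vendor !== "string" || vendor.length === 0) {
    errors.push("vendor must be a non-empty string");
  }
  if (typeof total !== "number" || !isFinite(total)) {
    errors.push("total must be a finite number");
  }
  if (errors.length > 0) return { value: null, errors };
  return { value: { vendor: vendor as string, total: total as number }, errors: [] };
}

const good = parseInvoice('{"vendor":"Acme","total":42.5}');
const bad = parseInvoice('{"vendor":"","total":"42.5"}');
```

Returning every error at once, rather than stopping at the first, gives a retry prompt enough detail to correct the whole output in one pass.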

Evaluation & Iteration

Continuous evaluation against defined success criteria. Rapid iteration cycles with automated testing and human review gates.
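One possible shape for such a loop is sketched below: automated checks run first, and a human review gate triggers when the pass rate falls under a threshold. The threshold policy, the `evaluate` function, and the stub system under test are all assumptions made for illustration.

```typescript
// Hypothetical eval harness: automated checks first, then a human review gate.
type EvalCase = { input: string; check: (output: string) => boolean };
type EvalReport = { passed: number; failed: string[]; needsHumanReview: boolean };

function evaluate(
  system: (input: string) => string,
  cases: EvalCase[],
  passThreshold: number, // minimum pass rate (0..1) to skip review; an assumed policy
): EvalReport {
  const failed: string[] = [];
  for (const c of cases) {
    if (!c.check(system(c.input))) failed.push(c.input);
  }
  const passed = cases.length - failed.length;
  const passRate = cases.length === 0 ? 0 : passed / cases.length;
  return { passed, failed, needsHumanReview: passRate < passThreshold };
}

// Stub system under test: upper-cases its input.
const shout = (input: string): string => input.toUpperCase();

const report = evaluate(
  shout,
  [
    { input: "ok", check: (out) => out === "OK" },
    { input: "mixed Case", check: (out) => out === "MIXED CASE" },
    { input: "fail", check: (out) => out === "fail" }, // deliberately failing case
  ],
  0.9,
);
```

Here two of three cases pass, so the run falls below the 0.9 threshold and is flagged for human review, with the failing input listed in `failed`.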

Agent Tooling & Harness

# Project Context

## Architecture

- Durable agent execution with checkpoint-based state

- Tool calling via Vercel AI SDK with Zod schemas

- MCP servers for workspace and database integration

## Conventions

- All tools must return structured outputs

- Human approval gates for destructive operations

- State persisted to Supabase between sessions

## Current Focus

- Implementing document extraction pipeline

- Adding multi-model fallback for reliability

Stack

Tools and platforms in active use.

Languages & Frameworks

Agent Tooling

Model Inference

Development

Deployment & Infrastructure

ML Ops

Multimodal - Voice

Multimodal - Image

Recent Thoughts

Insights on AI systems, safety research, and frontier model deployment.

Everyone calling 2025 the year of agents clearly missed the memo. That was 2024. This year? It's about operational intelligence—knowing when not to deploy agents. True flex isn't scaling agent frameworks; it's understanding their limits. Sometimes a lean LLM stack with precision tool integrations will outperform a bloated agent architecture. 2025 is the pivot point—less about chasing agent hype, more about architecting for efficiency, modularity, and actual ROI. It's the year of strategic deployment, not blind adoption.

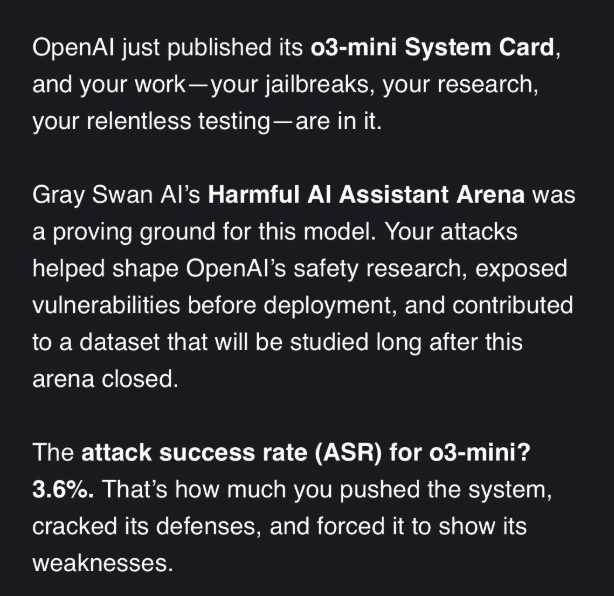

🚀 Pushing frontier #AI safety to the limit. Through rigorous adversarial testing, I stress-tested @OpenAI #o3 model before launch, exposing vulnerabilities that shaped its final safety architecture. Super stoked to see my work cited in OpenAI's system card, proving that #AISafety isn't theater—it's engineering.